Classification

Now we will predict whether one client will buy a bike or not based on certain demographic features. The method we’ll use is called DECISION TREE. Decision tress are algorithms of supervised classification, which seek to predict a specific dependent variable (BikeBuyer in this case). Both independent and depedendent variables can be either qualitative or quantitive. If the dependent variable is numerical, we call it “Regression Tree”; but in our case, where the variable is categorical (even if we represent it with O and 1), then we call it CLASSIFICATION TREE. And the end, we’ll compare it to the logistic regression model, which is used to predict binary variables too.

I. Let’s load the libraries and dataset.

Here the new ones are the rpart and rpart.plot libraries used for the creationg of the classification model and graphing the decision tree respectively.

library(readr) #to read in the csv

library(dbplyr) #for data wrangling

library(tidyverse) #for data wrangling, for example the function "select"

library(rpart) #decision tree algorithm

library(rpart.plot) #graph the decision treeII. Data Wrangle

The data wrangling part we can see it in the other sections (Regression or Exploratory Data Analysis). The only exception is that we added the column of Bike Buyer(which has 1 if the customer bought a bike, or a 0 if they didn’t) to the data_qualitative subset. That’s the most important column for this part of the analysis.

customer <- read_csv("~/CS 499 Senior Project/datasets/AdvWorksCusts.csv")

spend <- read_csv("~/CS 499 Senior Project/datasets/AW_AveMonthSpend.csv")

bikebuyer <- read_csv("~/CS 499 Senior Project/datasets/AW_BikeBuyer.csv")

three_datasets <- data.frame(customer, spend, bikebuyer)

data_clean <- select(three_datasets,-c(CustomerID.1, CustomerID.2))

missing_values <- sapply(data_clean, function(x) sum(is.na(x))) #it checks number of missing values by column

data_clean <- select(three_datasets,-c(CustomerID.1, CustomerID.2, Title, MiddleName, Suffix, AddressLine2))

data_clean <- data_clean[!duplicated(data_clean), ] #it removes duplicates

###Let's add up age column using the existing DOB column

#Change BirthDate from Character to Date format

data_clean$BirthDate <- as.Date(data_clean$BirthDate, format = "%m/%d/%Y")

#Append the new column called Age

data_clean$Age <- as.numeric(difftime("1998-01-01",data_clean$BirthDate, units = "weeks"))/52.25

data_qualitative <- data_clean %>% select(11:16,21)

data_quantitative <- data_clean %>% select(c(22,17:20))

features <- cbind(data_quantitative,data_qualitative)

#plot(data_quantitative)We’ll have to select some features, the ones that correlate the most with the dependent variable. I think it’s easier if we do that separating the independent variables between categorical and numerical. When you plot numerical variables, we can see some correlation with age and average month spent, so we’ll keep those, and get rid of the rest. As for the qualitative variables, we could do some visualizations or Chi Squared test, and explore the possible correlations. For now, let’s just use all possible explanatory variables and then “prune” our decision tree.

III. Now split our train/test set

We’ll divide our dataset this way: 60% train and 40% test. In the first line, we just create an index that we’ll assign 1’s and 2’s to each row, with aprobabibloty of 60/40 %, which in turns gives a vector that we’ll use as “dictionary” to tell which rows will be train and which will be test.

ind <- sample(2, nrow(features), replace = TRUE, prob = c(0.6,0.4)) #60% training, 40% test

trainData <- features[ind == 1, ] #training

testData <- features[ind == 2, ] # test IV. Creation of the Decision Tree

We input the dependent variable, and we’ll explain it with a rest of the the variables which is why we leave the space after the Bike buyer, in blank. here “method” indicated that our variable to be predicted is categorical

tree <- rpart(BikeBuyer ~ ., method = 'class', data = trainData)V. Checking tree and results

print(tree)## n= 9778

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

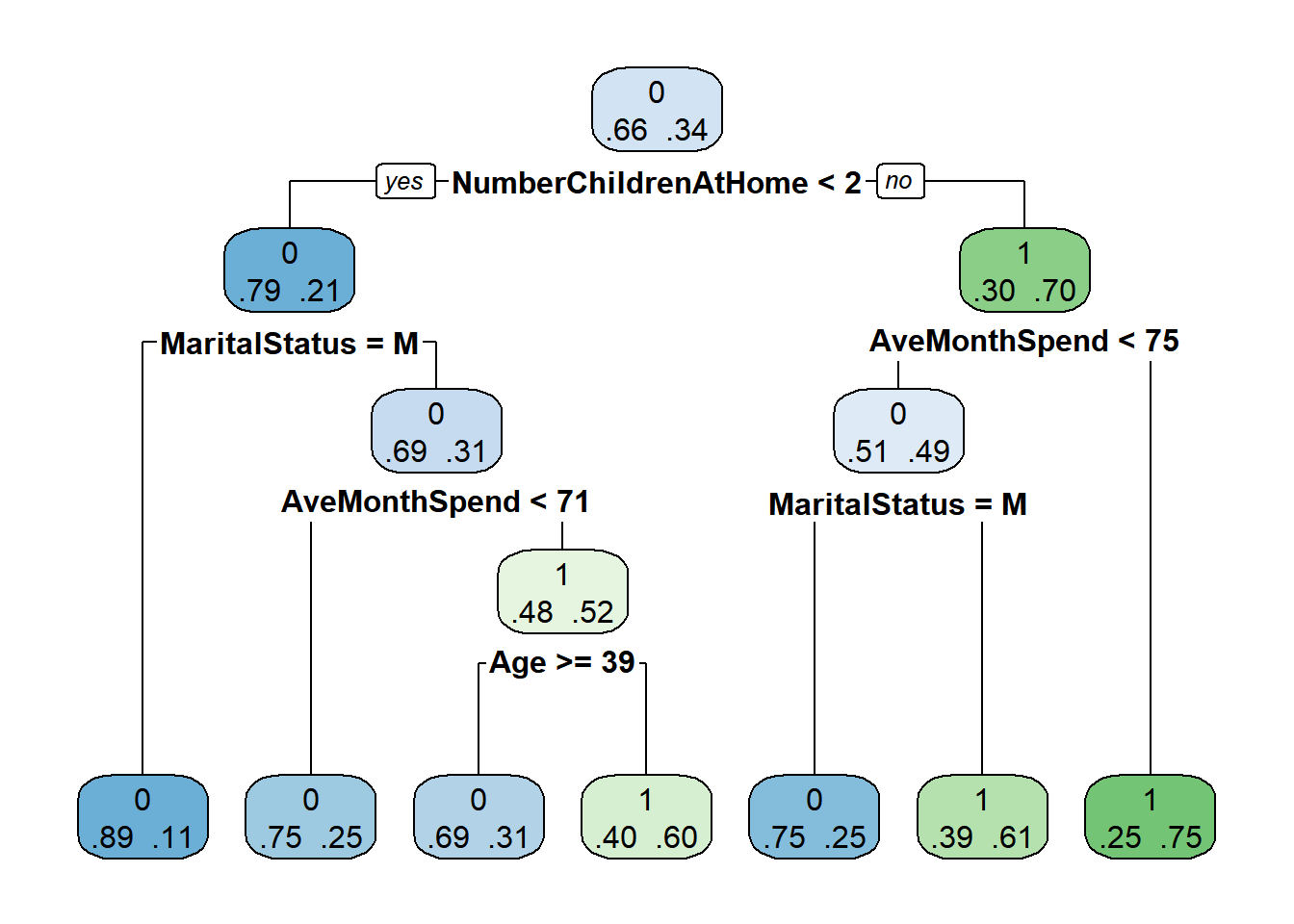

## 1) root 9778 3281 0 (0.6644508 0.3355492)

## 2) NumberChildrenAtHome< 1.5 7244 1512 0 (0.7912755 0.2087245)

## 4) MaritalStatus=M 3823 435 0 (0.8862150 0.1137850) *

## 5) MaritalStatus=S 3421 1077 0 (0.6851798 0.3148202)

## 10) AveMonthSpend< 70.5 2626 664 0 (0.7471439 0.2528561) *

## 11) AveMonthSpend>=70.5 795 382 1 (0.4805031 0.5194969)

## 22) Age>=39.43039 218 67 0 (0.6926606 0.3073394) *

## 23) Age< 39.43039 577 231 1 (0.4003466 0.5996534) *

## 3) NumberChildrenAtHome>=1.5 2534 765 1 (0.3018942 0.6981058)

## 6) AveMonthSpend< 74.5 535 263 0 (0.5084112 0.4915888)

## 12) MaritalStatus=M 182 46 0 (0.7472527 0.2527473) *

## 13) MaritalStatus=S 353 136 1 (0.3852691 0.6147309) *

## 7) AveMonthSpend>=74.5 1999 493 1 (0.2466233 0.7533767) *rpart.plot(tree, extra = 4) # extra = 4: probability of observations by class

printcp(tree) # stats of results##

## Classification tree:

## rpart(formula = BikeBuyer ~ ., data = trainData, method = "class")

##

## Variables actually used in tree construction:

## [1] Age AveMonthSpend MaritalStatus

## [4] NumberChildrenAtHome

##

## Root node error: 3281/9778 = 0.33555

##

## n= 9778

##

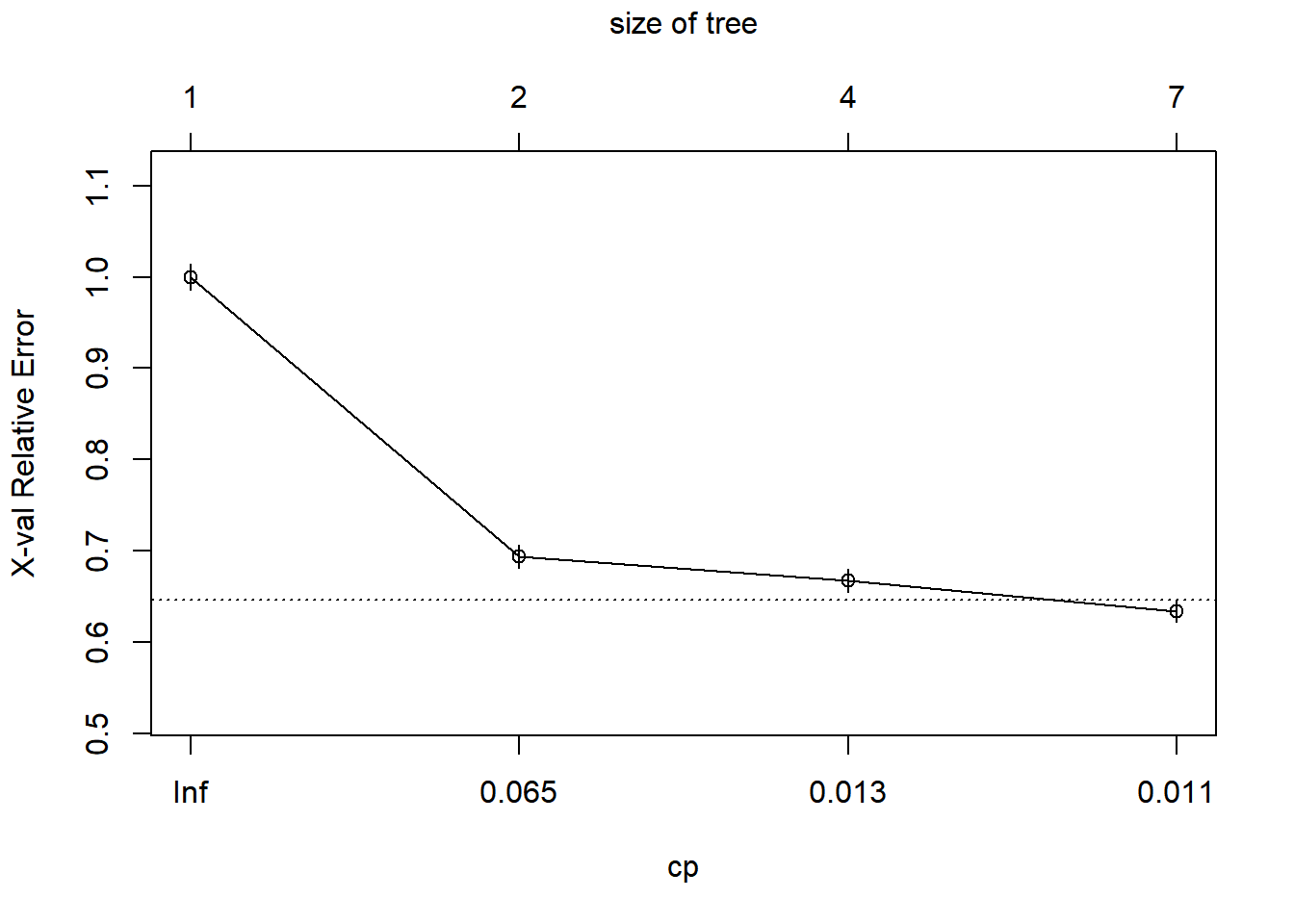

## CP nsplit rel error xerror xstd

## 1 0.306004 0 1.00000 1.00000 0.014231

## 2 0.013715 1 0.69400 0.69400 0.012738

## 3 0.011683 3 0.66657 0.66748 0.012565

## 4 0.010000 6 0.63151 0.63395 0.012334plotcp(tree) # evolution of error as the number of nodes increases

Here we see that N is the number of observations, 9,808. In the first line, we see that most observations/clients (66.8%) DIDN’T buy a bike (BikeBuyer = 0), and so on. For the company, it’s interesting to see that the person that will likely buy a bike the most is someone who is married, with more than 2 children at home.

VI. Pruning the tree

pruneTree <- prune(tree, cp = 0.01000) #Using the lowest CP from the printcp(tree) command above

printcp(pruneTree)##

## Classification tree:

## rpart(formula = BikeBuyer ~ ., data = trainData, method = "class")

##

## Variables actually used in tree construction:

## [1] Age AveMonthSpend MaritalStatus

## [4] NumberChildrenAtHome

##

## Root node error: 3281/9778 = 0.33555

##

## n= 9778

##

## CP nsplit rel error xerror xstd

## 1 0.306004 0 1.00000 1.00000 0.014231

## 2 0.013715 1 0.69400 0.69400 0.012738

## 3 0.011683 3 0.66657 0.66748 0.012565

## 4 0.010000 6 0.63151 0.63395 0.012334As you can see, printcp(pruneTree) and Tree is the same thus showing that the first command had already pruned (or didn’t) where it had to.

VII. Predict who will buy bikes on test data.

#We validate the ability of prediction of our prediction tree usin the test dataset

testTree <- predict(tree, newdata = testData, type = 'class')

#let's visualize the results with a matrix of confusion

table(testTree, testData$BikeBuyer)##

## testTree 0 1

## 0 3843 818

## 1 624 1358There we saw that our model predicted 4,024 people who were not buyers correctly, and 1051 who were did buy but we classifed them as not. Same, with the other row.

VIII. Evaluating the model.

## Let's calculate the % of correct answers

sum(testTree == testData$BikeBuyer) / length(testData$BikeBuyer)*100## [1] 78.29294it shows that we have 78.91% of accurate predictions!